Does the counter UAS system you bought work? Does it actually solve your problem?

Or, in marketing speak – CUAS vendors must be able to demonstrate in a quantifiable manner how their systems perform in a variety of environments, against a range of targets and flight profiles, and over time as hardware, software, and configurations evolve.

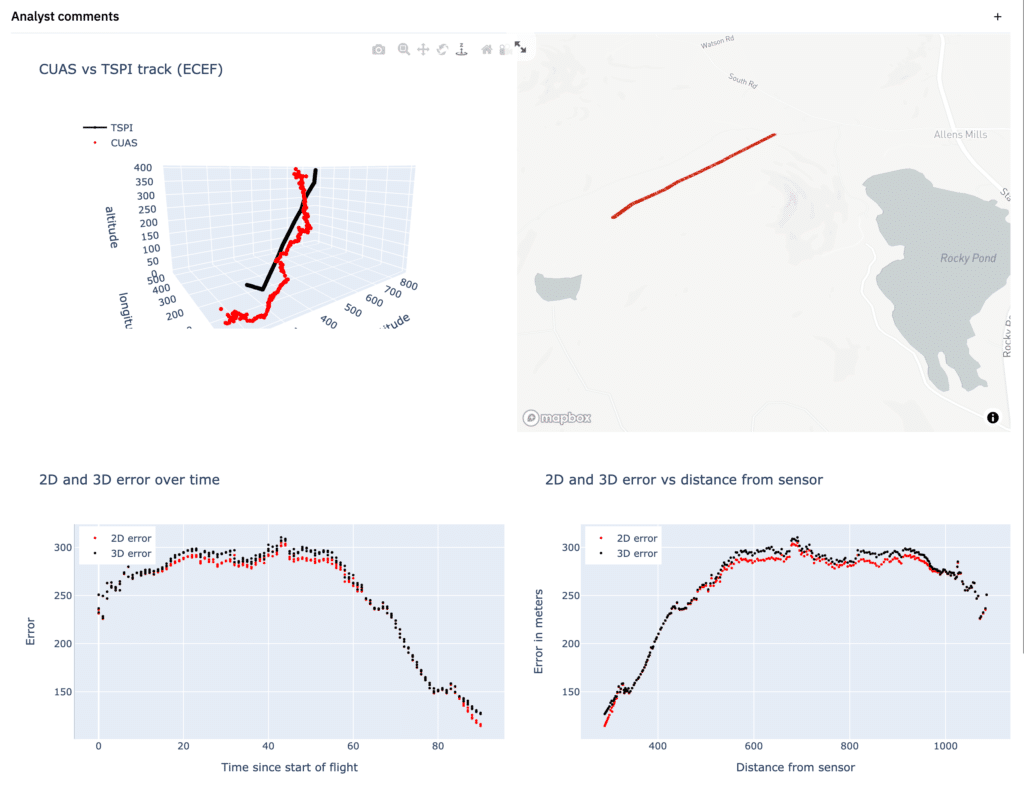

Or, in graphs, we want to be able to do this:

URSA has a long history of helping people make sense of UAS data. (Search for “David Kovar drone forensics” for examples.) We are now applying that experience, and our desire to support the community, to the counter UAS space. This series of blog posts shares some of what we have learned about extracting, organizing, and analyzing data from counter UAS systems. Much of this experience came from test and evaluation exercises, but it is applicable to anyone who needs to know how CUAS systems perform.

Who might be interested?

- CUAS evaluators require comprehensive data from systems, TSPI devices, targets, and other sources such as weather to accurately analyze and document systems’ performance during an exercise, and to be able to compare and contrast performance across exercises.

- Acquisition teams must know if a CUAS solution will solve their specific use cases – environment, threat, ROE – prior to investing resources in acquisition programs. Failure to do so will waste valuable time and limited funding. Failure to do so will ultimately cost lives.

- Investigators, prosecutors, and lawyers involved in criminal and civil investigations involving CUAS must know exactly what the system perceived during the course of the event, as well as what information was available to the operators of the system that led to any actions being taken.

- Regulators are turning to CUAS systems to better understand how manned and unmanned systems interact in the airspace. State and local governments use these systems to gain an objective understanding of how drones are operating over their citizens.

Anyone using CUAS systems or their data must first understand how that data is generated and which external and internal factors affect the system’s results. Only then can they use the data effectively.

This series will explore many challenges facing the industry as we grapple with the need to demonstrate CUAS effectiveness in meaningful ways. We welcome everyone’s participation in the discussions.

The articles in this series are:

- Counter UAS Test and Evaluation Series

- Defining CUAS Effectiveness

- CUAS: TSPI Devices and Ground Truth

- CUAS: The Need for Human Observers

- CUAS: Data Sources and Their Strengths and Weaknesses

- CUAS Data: The Hardest Part – Collection, Organization, and Validation

- CUAS: Data Normalization

- CUAS: Data Visualization

- Comparing CUAS Track Data

- CUAS Test and Evaluation: URSA’s Journey

There is an enormous amount of work to be done on this topic, by URSA, by vendors, by standards committees, and by governments. We can collaboratively create an ecosystem where the effectiveness of CUAS systems against a variety of targets and in a variety of conditions is known in advance rather than after acquisition. To get there, we need to talk, share data, conduct exercises whose results are made available to acquisition teams and potential customers, and feed lessons learned back into the ecosystem so that everyone benefits. This will meet some resistance, perhaps significant resistance, but ultimately it is necessary for national security and for the protection of life and property in general.